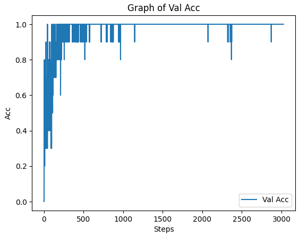

Second, I wrote an algorithm that finds the most distinct samples in the training set and sets them aside as the validation set.

The competition rules required the validation set to come from the training set, so my new function let me choose the most mathematically distinct samples for it.

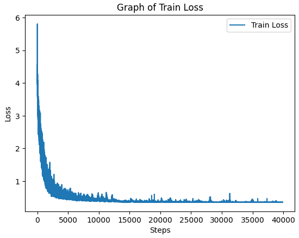

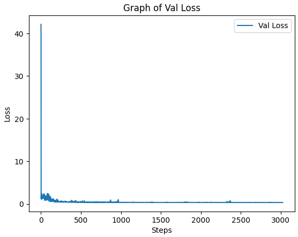

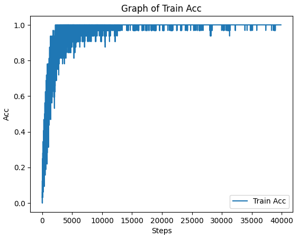
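To make the idea of "most distinct" concrete, here is a minimal sketch of one possible approach: greedy farthest-point selection over feature vectors. This is an assumption for illustration, not necessarily the method shown above. The function name `select_distinct`, the use of Euclidean distance, and the idea that each sample is represented by a numeric feature vector are all hypothetical.

```python
import math

def select_distinct(features, k):
    """Greedy farthest-point selection: return indices of k mutually distant samples.

    `features` is a list of equal-length numeric vectors (hypothetical representation).
    """
    n = len(features)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of samples")

    # Start from the sample farthest from the centroid (a deterministic starting choice).
    dim = len(features[0])
    centroid = [sum(f[d] for f in features) / n for d in range(dim)]
    first = max(range(n), key=lambda i: math.dist(features[i], centroid))
    chosen = [first]

    # min_dist[i] = distance from sample i to its nearest already-chosen sample.
    min_dist = [math.dist(f, features[first]) for f in features]
    min_dist[first] = -1.0  # mark as taken so it is never picked again

    while len(chosen) < k:
        # Pick the sample that is farthest from everything chosen so far.
        nxt = max(range(n), key=lambda i: min_dist[i])
        chosen.append(nxt)
        for i, f in enumerate(features):
            if min_dist[i] >= 0:
                min_dist[i] = min(min_dist[i], math.dist(f, features[nxt]))
        min_dist[nxt] = -1.0
    return chosen
```

The returned indices would become the validation set and the remaining samples the training set. In practice the feature vectors might come from a pretrained network's embeddings rather than raw pixels, but that is a design choice this sketch leaves open.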

I also implemented a scheduler that keeps running epochs until validation accuracy stops improving by a minimum amount, and I upgraded the model to ResNet-50.
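One reading of this scheduler is an early-stopping loop. The sketch below assumes that interpretation. The names `train_until_plateau`, `run_epoch`, `min_delta`, and `patience` are hypothetical, and `run_epoch` stands in for whatever trains one epoch and returns validation accuracy.

```python
def train_until_plateau(run_epoch, min_delta=0.005, patience=3, max_epochs=100):
    """Run epochs until validation accuracy stops improving by at least `min_delta`.

    `run_epoch(epoch)` is a hypothetical callable that trains for one epoch
    and returns the validation accuracy as a float.
    """
    best = float("-inf")
    stale = 0          # consecutive epochs without a sufficient improvement
    history = []
    for epoch in range(max_epochs):
        acc = run_epoch(epoch)
        history.append(acc)
        if acc - best >= min_delta:
            best = acc
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break  # accuracy has plateaued
    return best, history
```

A `patience` above 1 keeps a single noisy epoch from ending training early, and `max_epochs` caps the run in case accuracy keeps creeping upward.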

Lastly, I doubled the total number of samples with data augmentation. I used more extreme augmentations and added the augmented copies to the set instead of only transforming the samples that already existed.

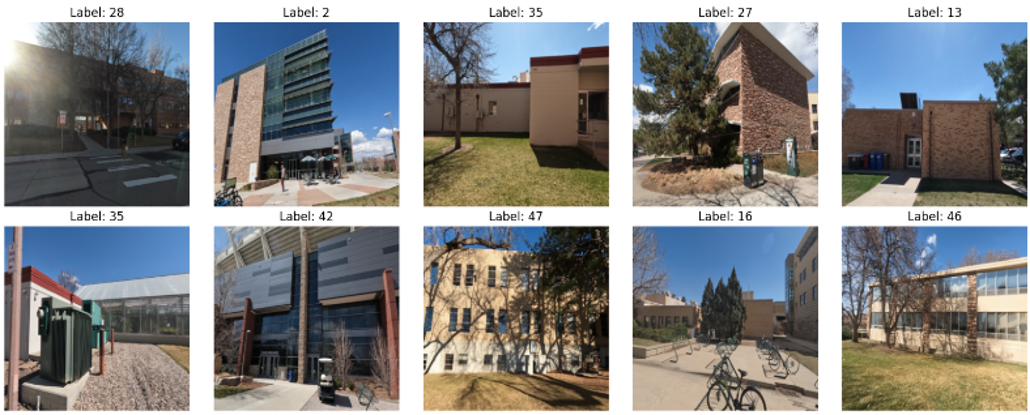
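The difference between extending the set and transforming it in place can be sketched in a few lines. This is a toy illustration under stated assumptions: images are plain nested lists of grayscale values, and `strong_augment` and `extend_with_augmented` are hypothetical helpers, not the functions used in the project.

```python
import random

def strong_augment(image, rng):
    """Toy 'extreme' augmentation on a grayscale image stored as rows of 0-255 ints."""
    # Random horizontal flip.
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    # Large brightness change, clamped to the valid pixel range.
    factor = rng.uniform(0.4, 1.6)
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]

def extend_with_augmented(samples, augment, seed=0):
    """Return the original (image, label) pairs plus one augmented copy of each."""
    rng = random.Random(seed)
    extra = [(augment(image, rng), label) for image, label in samples]
    return list(samples) + extra  # originals are kept, so the set doubles in size
```

Because the originals stay in the list, the model still sees every unmodified frame while also training on the harsher variants.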

My initial accuracy was around 12-18%, and these changes improved it to over 40%. While this is not an impressive number on the surface, using just one video of each building I was able to get my building classifier to correctly identify 4/10 CSU buildings from pictures it had never seen before.

This experience taught me a lot about training and using machine learning models and I intend to participate in the Spring 2025 competition.